Queues

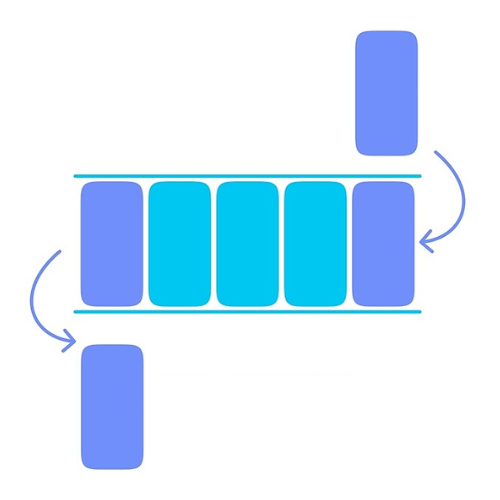

A queue is a fundamental data structure used in system design to manage and process asynchronous tasks or messages. It follows the First-In-First-Out (FIFO) principle, where the first item added to the queue is the first one to be processed or dequeued.
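
The FIFO behaviour described above can be illustrated with a minimal in-process sketch. It assumes Python's standard `collections.deque` as the underlying container; the item values are placeholders.

```python
from collections import deque

# A deque supports O(1) appends on the right and O(1) pops on the left,
# which matches the enqueue/dequeue pair a FIFO queue needs.
queue = deque()

# Enqueue three items in order.
for item in ["a", "b", "c"]:
    queue.append(item)

# Dequeue until empty; items come out in insertion order.
processed = []
while queue:
    processed.append(queue.popleft())

print(processed)  # ['a', 'b', 'c']
```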

Usage Scenarios

- Task Queue: Managing background tasks or jobs asynchronously, ensuring they are processed in order.

- Message Queue: Facilitating communication between different parts of a distributed system, ensuring reliable message delivery.

- Request Queue: Handling incoming requests in systems where processing might be delayed or requires sequential handling.

Characteristics

- First-In-First-Out (FIFO): Items are dequeued in the order they were added. When several consumers share a queue, the order in which items finish processing can still differ.

- Concurrency: Multiple consumers can dequeue items concurrently from the same queue.

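- Reliability: Durable queues persist messages and rely on acknowledgements so that tasks are not lost when a consumer or the queue itself fails. Purely in-memory queues do not provide this guarantee.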

- Scalability: Queues can handle varying loads by buffering tasks during peak times.
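
As a rough illustration of the concurrency and buffering points above, here is a sketch of several consumer threads draining one shared queue. It assumes Python's standard `queue.Queue`; the function name `run_workers`, the doubling "work", and the `None` sentinel are illustrative choices, not part of any particular system.

```python
import queue
import threading

def run_workers(tasks, num_workers=3):
    """Process tasks with several consumer threads sharing one queue."""
    q = queue.Queue()              # thread-safe FIFO buffer
    results = []
    results_lock = threading.Lock()

    def worker():
        while True:
            task = q.get()
            if task is None:       # sentinel: no more work for this worker
                q.task_done()
                break
            with results_lock:
                results.append(task * 2)   # stand-in for real work
            q.task_done()

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()

    # Producer side: tasks are buffered in the queue until a worker is free.
    for task in tasks:
        q.put(task)
    for _ in threads:
        q.put(None)                # one sentinel per worker

    q.join()                       # wait until every item has been handled
    for t in threads:
        t.join()
    return results
```

Items are dequeued in FIFO order, but because the workers run concurrently, the order of `results` is not guaranteed to match the input order.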

Benefits of Using Queues

- Decoupling: Allows components to communicate asynchronously, reducing dependencies and improving system flexibility.

- Load Balancing: Spreads work across multiple consumers or processing nodes, since each consumer takes the next item as soon as it is free.

- Error Handling: Facilitates retry mechanisms for failed tasks or messages without blocking the system.

- Scalability: Supports horizontal scaling by adding more consumers to process tasks concurrently.
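
The retry behaviour mentioned under Error Handling can be sketched as follows. This is a simplified in-memory model, not the mechanism of any specific broker: the names `process_with_retries`, `max_attempts`, and `dead_letter`, and the `flaky` handler, are assumptions made for illustration.

```python
from collections import deque

def process_with_retries(tasks, handler, max_attempts=3):
    """Drain a queue, re-enqueueing failed tasks up to max_attempts times."""
    pending = deque((task, 1) for task in tasks)   # (task, attempt number)
    done, dead_letter = [], []

    while pending:
        task, attempt = pending.popleft()
        try:
            done.append(handler(task))
        except Exception:
            if attempt < max_attempts:
                # Re-enqueue at the back so other tasks are not blocked.
                pending.append((task, attempt + 1))
            else:
                # Give up and set the task aside for inspection.
                dead_letter.append(task)
    return done, dead_letter

# Example handler: "b" fails once and then succeeds, "c" always fails.
calls = {}

def flaky(task):
    calls[task] = calls.get(task, 0) + 1
    if task == "b" and calls[task] < 2:
        raise RuntimeError("transient failure")
    if task == "c":
        raise RuntimeError("permanent failure")
    return task.upper()

done, dead = process_with_retries(["a", "b", "c"], flaky)
print(done, dead)  # ['A', 'B'] ['c']
```

Real systems usually add a delay or backoff between attempts; that is left out here to keep the sketch short.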

Implementing Queues

- Message Brokers: Kafka, RabbitMQ, ActiveMQ

- Cloud Services: AWS SQS, Azure Queue Storage, Google Cloud Pub/Sub

- Database-backed Queues: Redis (lists or streams), or PostgreSQL and MySQL tables with transactional support
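
To make the database-backed option more concrete, here is a minimal sketch of a queue stored in a table. It uses Python's built-in `sqlite3` module purely as a stand-in for a server database; the `jobs` table, its columns, and the function names are hypothetical. On PostgreSQL or MySQL, concurrent consumers would more commonly claim rows with `SELECT ... FOR UPDATE SKIP LOCKED` rather than the single write lock used here.

```python
import sqlite3

def open_queue(path=":memory:"):
    # isolation_level=None puts the connection in autocommit mode,
    # so transactions are controlled explicitly with BEGIN/COMMIT.
    conn = sqlite3.connect(path, isolation_level=None)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS jobs ("
        " id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " payload TEXT NOT NULL,"
        " status TEXT NOT NULL DEFAULT 'pending')"
    )
    return conn

def enqueue(conn, payload):
    conn.execute("INSERT INTO jobs (payload) VALUES (?)", (payload,))

def dequeue(conn):
    """Claim the oldest pending job, or return None if none is pending."""
    conn.execute("BEGIN IMMEDIATE")   # take the write lock before reading
    try:
        row = conn.execute(
            "SELECT id, payload FROM jobs"
            " WHERE status = 'pending' ORDER BY id LIMIT 1"
        ).fetchone()
        if row is not None:
            conn.execute(
                "UPDATE jobs SET status = 'processing' WHERE id = ?", (row[0],)
            )
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise
    return row

def complete(conn, job_id):
    # Remove the job once its work has finished successfully.
    conn.execute("DELETE FROM jobs WHERE id = ?", (job_id,))
```

Selecting and updating inside one transaction is what keeps two consumers from claiming the same job. A job left in the `processing` state after a crash would need a timeout or cleanup step, which this sketch omits.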

Queues are essential in system design for managing asynchronous tasks, delivering messages reliably, and improving scalability and fault tolerance. Knowing their characteristics, benefits, and implementation options helps developers choose the right kind of queue and integrate it into an architecture effectively.